As government leaders scramble to explore and agree upon guardrails for the artificial intelligence industry, the tech sector is already acting through a newly announced oversight entity.

The Frontier Model Forum

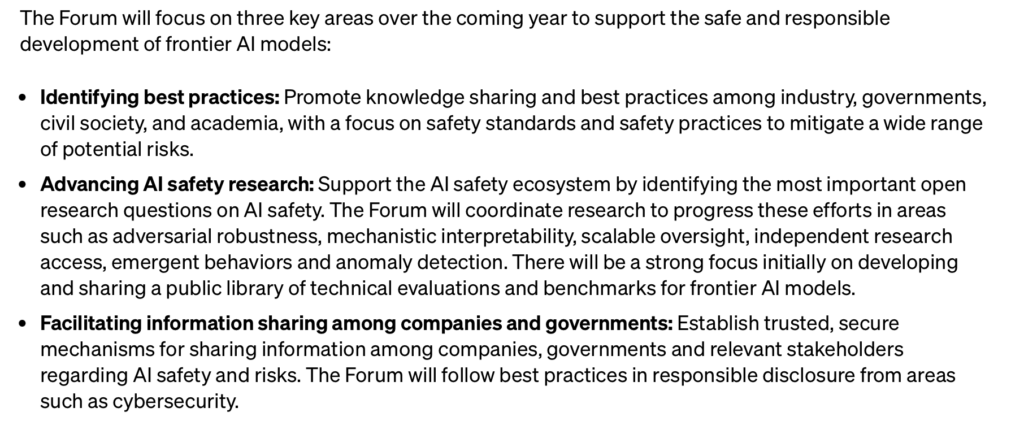

This week, four major players in generative AI (Anthropic, Google, Microsoft and OpenAI) came together to announce the Frontier Model Forum. It's an umbrella industry body focused on ensuring the safe and responsible development of frontier AI models.

More Regulation Progress

This news came out days after the biggest tech companies in the space, including Amazon, Anthropic, Google, Inflection, Meta, Microsoft and OpenAI, committed to a separate set of voluntary safeguards as part of a White House initiative. A highlight of the agreement is a commitment to watermark all forms of AI-generated content, from text and images to audio and video, so that users will know when the technology has been used, according to Reuters.

It also includes a red-teaming commitment “to allow outside experts to test their systems before they are released to the public. A red team exercise is designed to simulate what could go wrong with a given technology – such as a cyberattack or its potential to be used by malicious actors – and allows companies to proactively identify shortcomings and prevent negative outcomes,” according to CNN.

Experts say some of the commitments made to the White House were already standard practice in the industry, and none of them are enforceable. That is why lawmakers face mounting pressure to regulate the industry quickly. Later this year, the EU is expected to adopt the first series of regulatory steps, with the rest of the world watching and likely to follow. Ultimately, global leaders want safeguards that apply worldwide, so that countries such as China and Russia cannot turn the technology's capabilities to malicious ends.

I discuss the importance of regulation and the impact of artificial intelligence on the global economy in a panel interview with leaders from the World Economic Forum, Hewlett Packard Enterprise and Crusoe Energy.

With artificial intelligence developing rapidly and a growing number of companies and countries racing to explore its possibilities, the Frontier Model Forum is seen as a positive start, with private industry stepping in before government does.

Trusting Tech

Still, trust is a concern for lawmakers and consumer advocates when Big Tech takes the helm. Former Google CEO Eric Schmidt recently made the case for tech leading the AI regulation effort, telling NBC: “There’s no one in the government who can get it right. But the industry can roughly get it right.”

Yet studies show that public trust in tech companies and their leadership is waning. The tech industry, once the most trusted of all business sectors, fell to ninth place overall, according to a 2021 survey by the Wall Street Journal and Deloitte. Cybersecurity breaches, data leaks and studies showing social media's harm to mental health are among the reasons advocates are pushing for a broad, collaborative approach to AI regulation, and fast.